Detecting Loose Fasteners on Reflective, Oily Assemblies: Why Traditional Vision Fails—and How AI Vision Solves It

Loose or missing fasteners are a classic latent defect. In automotive engines, gearboxes, braking modules, battery enclosures, or any safety-critical assembly, a single loose bolt can lead to vibration, leak paths, NVH complaints, or catastrophic failure.

The Real Problem (It's More Than "Find the Bolt")

In practice, three realities make automated detection hard:

1. Physics of Loosening

Bolts lose preload under cyclic vibration and shock; micro-rotations at the thread interface and joint settlement reduce clamp force until the joint slips—even if torque at build was nominal. That's "self-loosening," and it's a known, studied mechanism in fastener engineering.

2. Optics of Reality

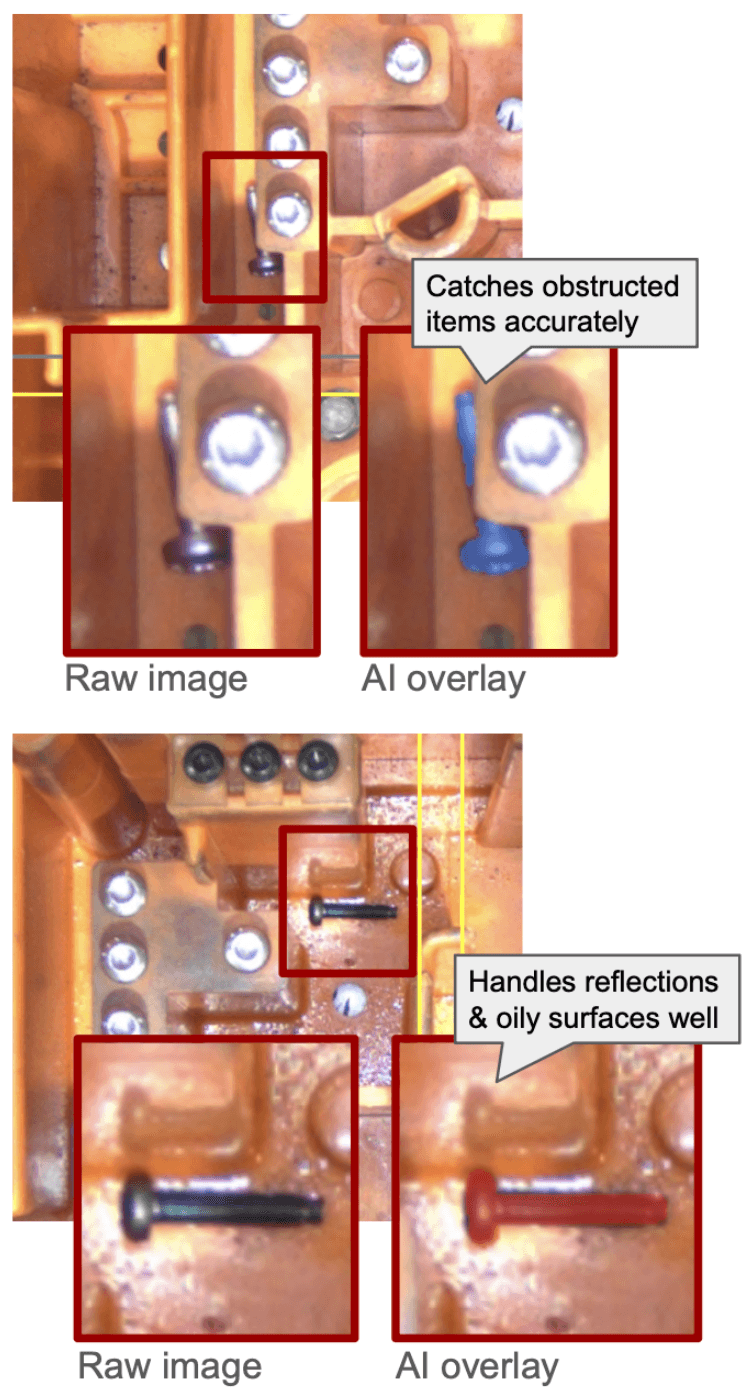

Parts are shiny and oily. Reflections, specular glare, and thin oil films defeat rule-based AOI; thresholds trip on glare, not geometry. Coaxial/telecentric optics are often required to "see through" reflection and preserve true feature contrast.

3. Geometry in the Wild

Fasteners vary in finish and color; they're partially occluded by ribs/flanges; orientations change across kits. Perspective tilt alone can break simple templates—unless you correct it or use models that generalize across pose.

Layer on Fastener Assurance System (FAS) expectations and compliance frameworks (e.g., Fastener Quality Act traceability), and you need inspection that's repeatable, data-rich, and auditable—not "best effort."

Why Legacy AOI Underperforms Here

- •Threshold brittleness. Specular highlights cause false positives; shadowing causes misses.

- •Color/finish drift. Dual-finish or coated hardware breaks color-seg rules.

- •Hidden visibility. Bolts tucked behind edges, clamps, or hoses are intermittently visible.

- •Perspective distortion. Camera angles change; templates don't.

How Overview AI Solves It (Production-Ready, Not Lab-Only)

1. Make the Image "Truthful" with the Right Optics

We match the field of view and working distance with telecentric lenses to keep magnification constant and edges true, then add coaxial (on-axis) lighting to suppress glare. This combination reveals real geometry (head flats, washer edges, shank silhouette) while de-emphasizing specular noise.

2. Learn Features—Not Thresholds—with Segmentation

Instead of asking, "Is this bright blob a bolt?", our segmentation recipe learns the shape + texture of a fastener and its context. That lets the model:

- ✓detect fasteners in obstructed or partially visible views,

- ✓ignore glare/oil artifacts, and

- ✓handle multiple finishes and colors in one recipe.

3. Train Fast, at the Edge, and Iterate On-Line

Using the OV80i, training happens on-device (NVIDIA Orin NX). Typical segmentation recipes go from first images → validated model in hours, not weeks—so you can adapt when fixtures, finishes, or lighting change. Learn more about our fast implementation process.

4. Operationalize with Logic + Data

We pair AI output with lightweight logic (voting, masking, confidence thresholds) so the cell PLC gets deterministic signals (EtherNet/IP/PROFINET), and QA gets traceable FAS-friendly artifacts (images, masks, timestamps) for audits and continuous improvement.

What "Good" Looks Like on the Line

State-of-the-Art Accuracy

Detecting present/missing or loose fasteners under reflective/oily conditions.

Stable False-Alarm Rates

By design (glare-robust optics + learned features + confidence logic).

Short Time-to-Value

Capture a small, representative set; label in the browser; train; validate on shift.

Compliance-Ready

Images + overlays + metadata support FAS and customer PPAP/traceability needs.

FAQs

Can AI vision really "see" through oil and glare on metal?

With coaxial lighting and proper lenses, speculars are suppressed and edges resolved; segmentation then learns geometry/texture, not brightness. That's the winning combo.

We have mixed finishes and colors—do we need separate recipes?

Often no. One segmentation recipe can learn multiple finish classes if you seed training with a modest but representative set from each.

How many images are needed?

Start small (dozens), validate in "shadow mode," then add edge cases. With on-device training, each iteration is minutes to ~1–2 hours (OV80i).

How do you prove to customers that fastener assurance is covered?

We store per-part images, masks, and decision logs to support Fastener Assurance Systems (FAS) and FQA/contractual audit trails.

See It in Action

Explore the OV80i and ask us about fastener detection packages for your FAS and PPAP requirements.