- 25 Nov 2024

Using the Segmenter

Note

Download the sample recipe here.

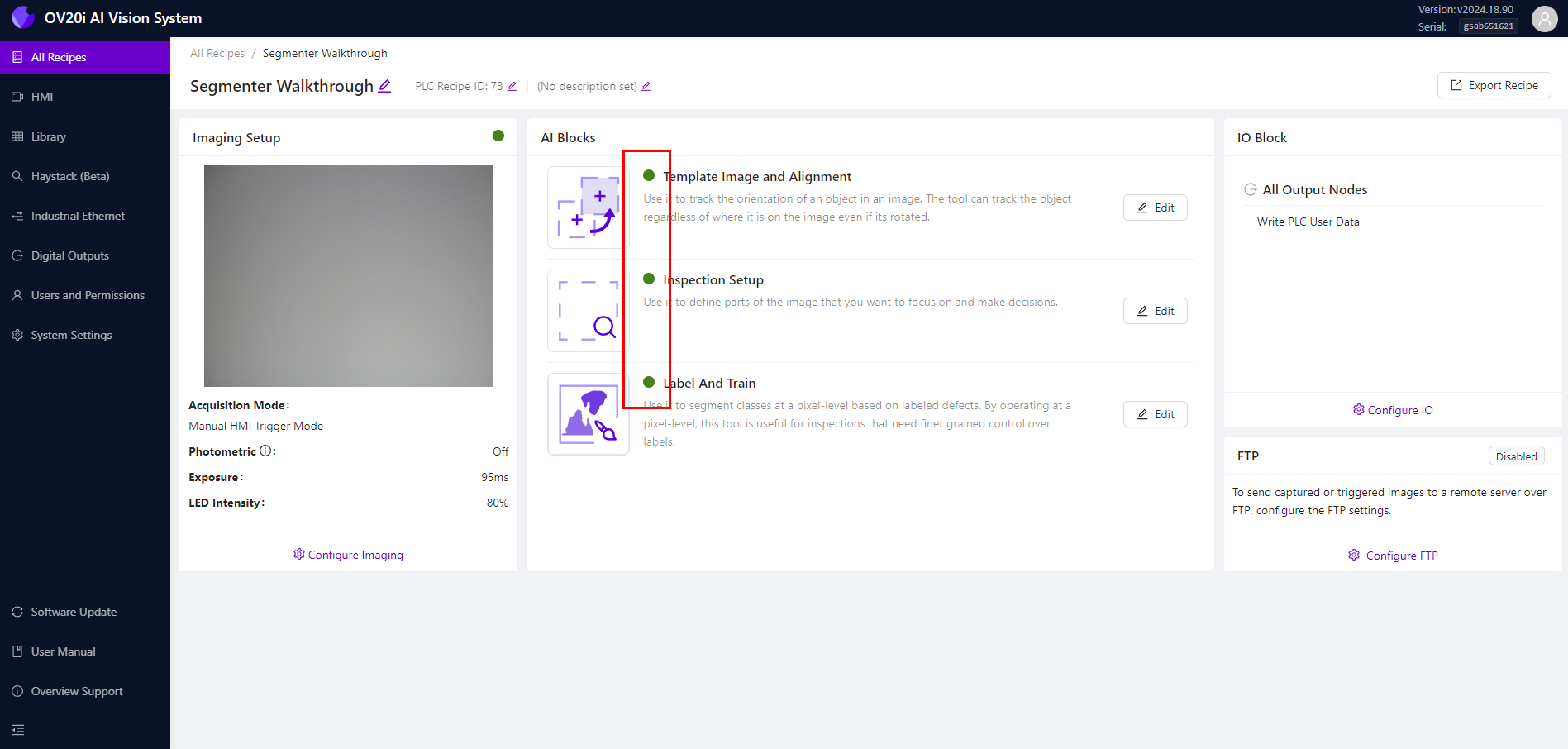

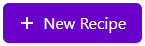

From the All Recipes page, click + New Recipe in the top-right corner.

The Add A New Recipe modal will appear. Enter a Name for the Recipe (required) that reflects the specific application you are working on, and select Segmentation from the Recipe Type drop-down menu. Click OK to create the new Recipe.

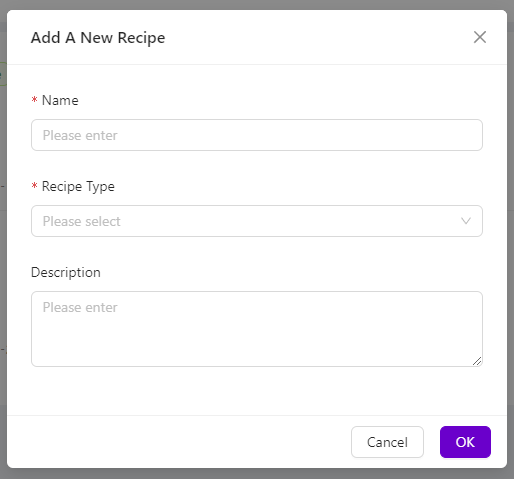

The new Recipe will be listed on the All Recipes page as Inactive. On the right-hand side of the Recipe, select Actions > Activate, then click Activate to confirm.

Click Edit to start creating your first Segmenter model, then click Open Editor to confirm.

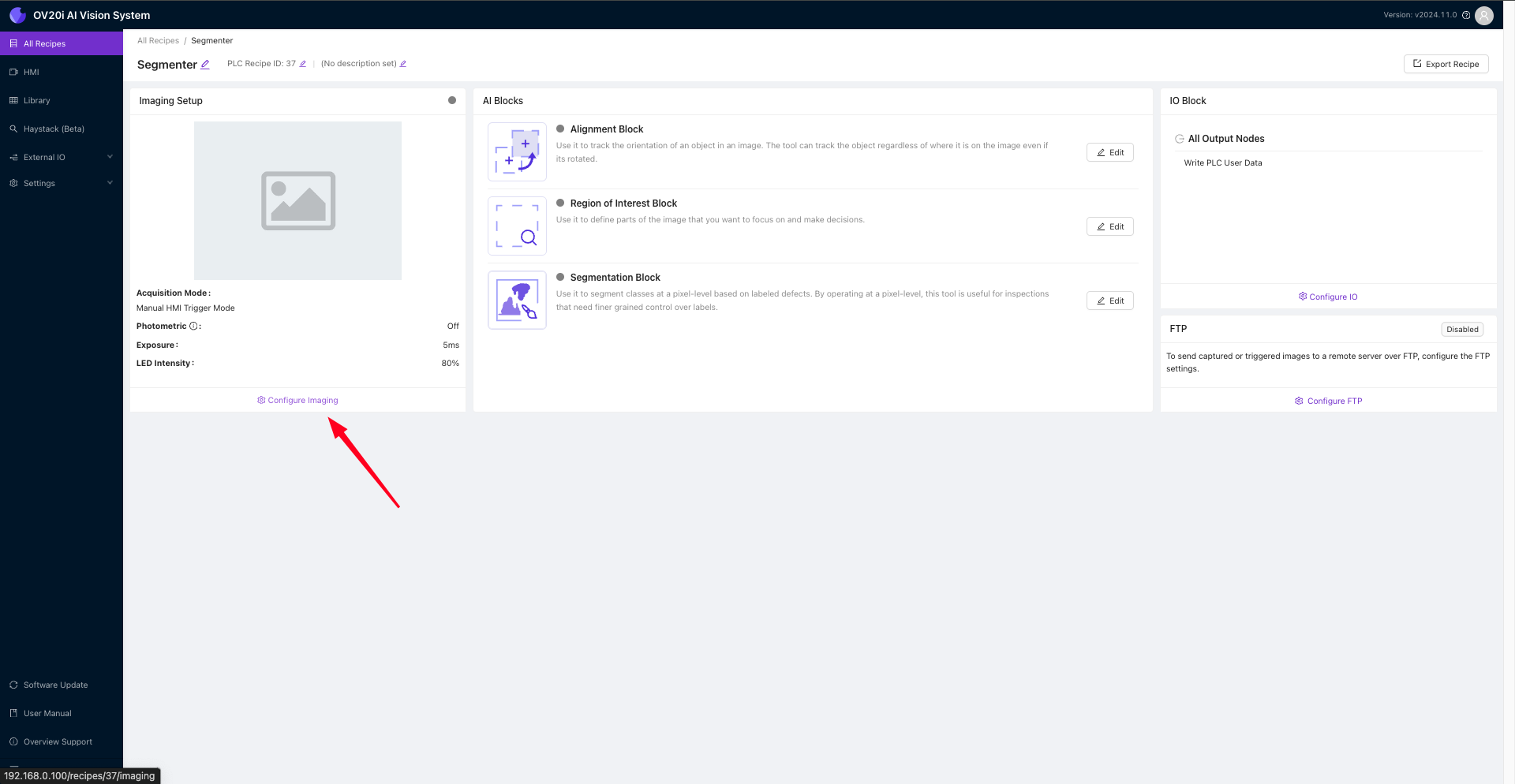

Click Configure Imaging in the lower left-hand side of the page to start setting up your OV20i camera for this application.

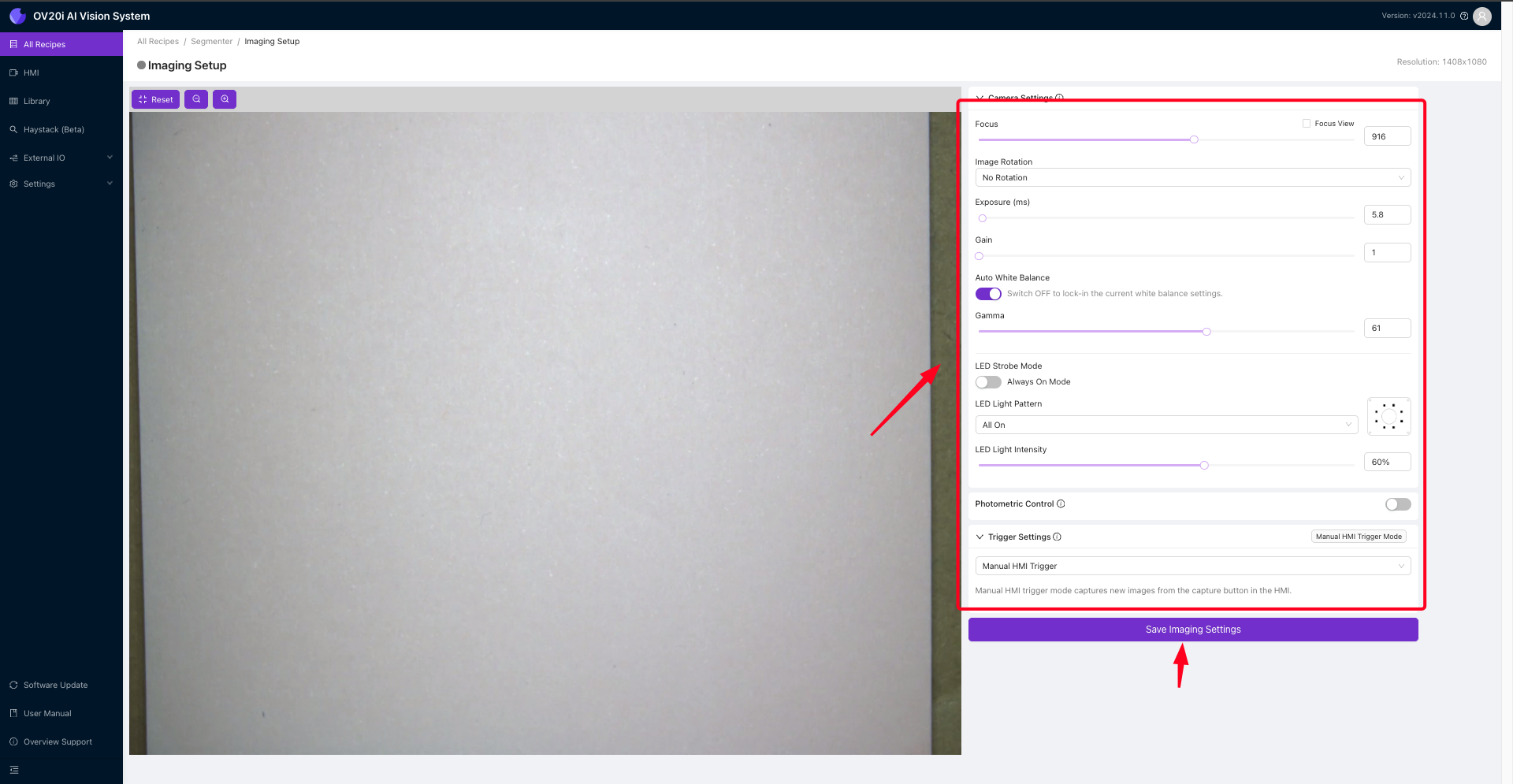

When setting up the camera, take the time to configure every camera setting correctly. Start by focusing the camera on the region of interest, the specific area of the image that contains the object or feature you want to analyze. You can adjust the Focus using the slider or by entering a value manually.

Exposure is another critical setting; it controls how much light reaches the camera's sensor. You can adjust the Exposure using the slider or by entering a value manually.

Optimizing lighting conditions is also crucial for accurate and reliable results. Make sure the lighting suits the type of analysis you want to perform. For example, if you're analyzing a reflective surface, you may need to adjust the lighting to avoid glare or reflections; you can do this by choosing a different LED Light Pattern. You can also configure the camera's in-house designed lights to produce various patterns that reveal defects which may only become visible under particular reflective conditions.

Getting the Gamma right is also important. Gamma controls how pixel brightness is mapped between the dark and light areas of an image, which changes the contrast in the midtones. Adjusting the Gamma correctly can reveal more detail in the image and make defects or features of interest easier to identify.
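To make the idea concrete, here is a minimal JavaScript sketch of a common power-law gamma curve applied to 8-bit pixel values. It assumes the convention out = 255 · (in / 255)^(1/γ); this guide doesn't document the exact curve or convention the OV20i uses, so treat the hypothetical `applyGamma` function as an illustration, not the camera's implementation.

```javascript
// Illustrative power-law gamma curve on 8-bit intensities.
// Assumption: out = 255 * (in / 255) ** (1 / gamma). The OV20i's actual
// implementation and convention are not documented in this guide.
function applyGamma(value, gamma) {
  if (gamma <= 0) {
    throw new RangeError("gamma must be positive");
  }
  const normalized = Math.min(Math.max(value, 0), 255) / 255; // clamp, then scale to 0..1
  return Math.round(255 * normalized ** (1 / gamma)); // map back to 0..255
}

// Black and white stay fixed; only the midtones move.
console.log([0, 64, 128, 192, 255].map(v => applyGamma(v, 2.2)));
```

Under this convention, black (0) and white (255) are unchanged, a γ above 1 brightens the midtones, and a γ below 1 darkens them.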

Once all of these settings are configured, simply hit Save Imaging Settings to apply them and start using the camera for your analysis.

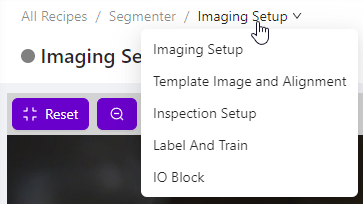

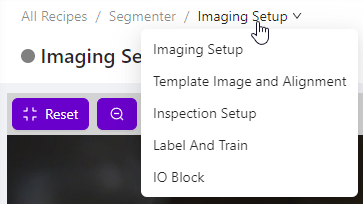

Next, navigate to Template Image and Alignment.

Navigation Tip

Click on the Recipe Name in the breadcrumb menu to return to the Recipe Editor or use the drop-down menu to select Template Image and Alignment.

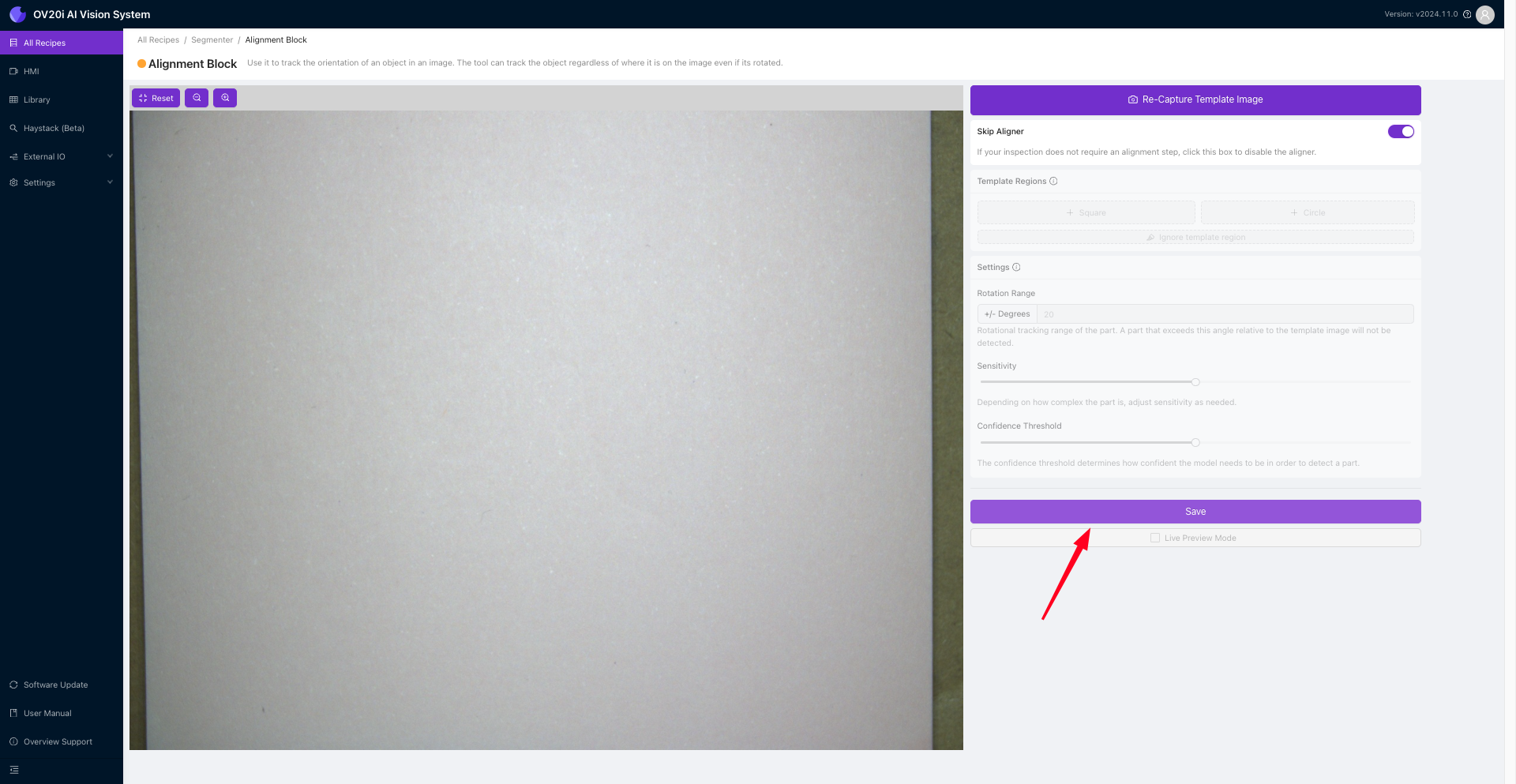

On the Template Image and Alignment page, you can capture a template image and configure the Aligner. This task doesn't require alignment, so select Skip Aligner, then click Save to apply the change and move on to the next step.

Next, navigate to Inspection Setup.

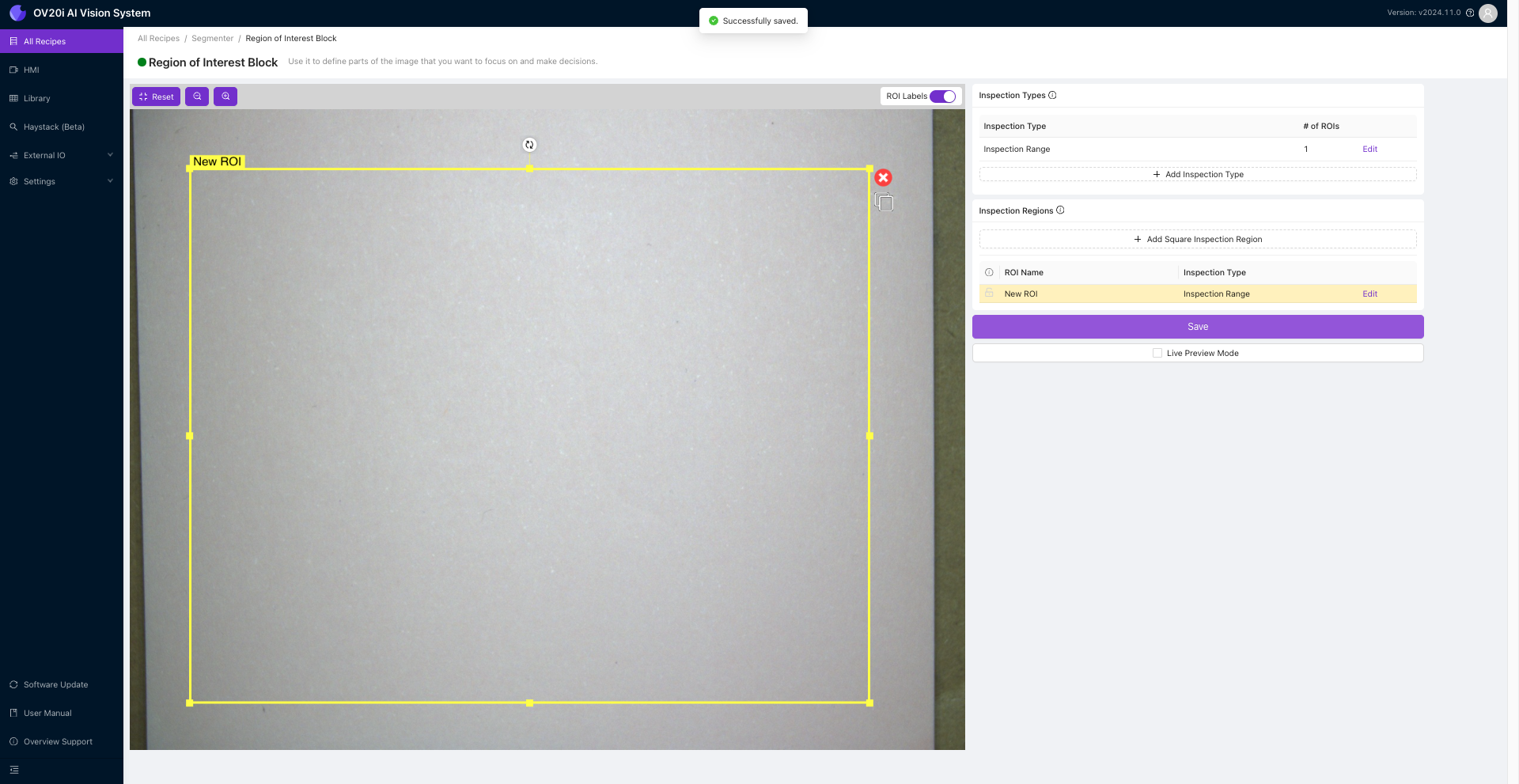

For this particular case, the inspection will be focused on the sheet. However, you can select a different inspection type for your specific use case and adjust the inspection region accordingly.

Once you have selected the appropriate inspection type, adjust the Region of Interest (ROI) so that the inspection covers the correct area. Drag the corners of the ROI box to change its size and position. Make sure the ROI is correctly aligned with the object you want to analyze to obtain the most accurate results.

Once you have adjusted the ROI, simply hit Save to apply the changes and continue with the inspection process.

Next, navigate to Label And Train.

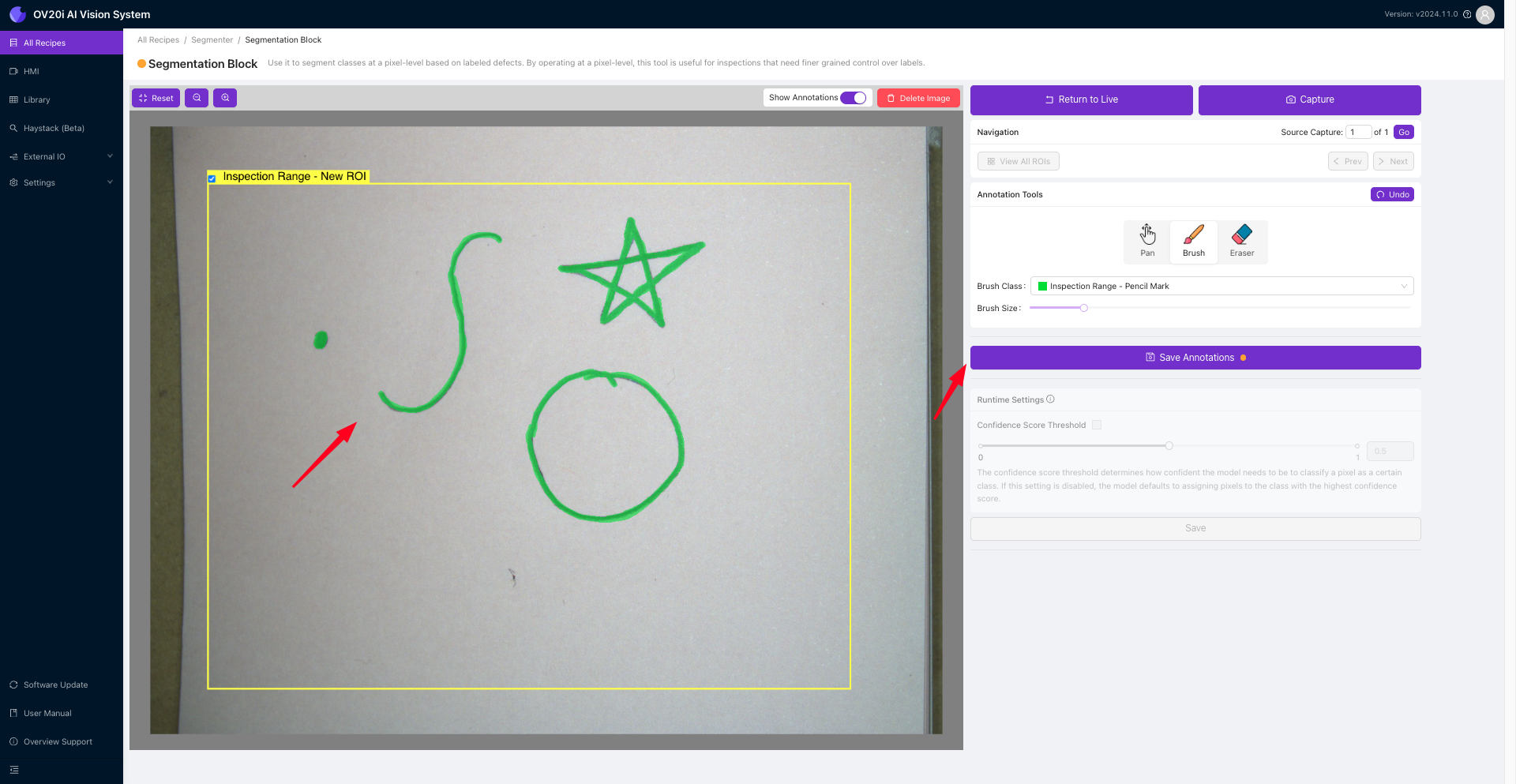

Under Inspection Types, click Edit to rename the class to “Pencil Mark”. You can also change the color associated with the class.

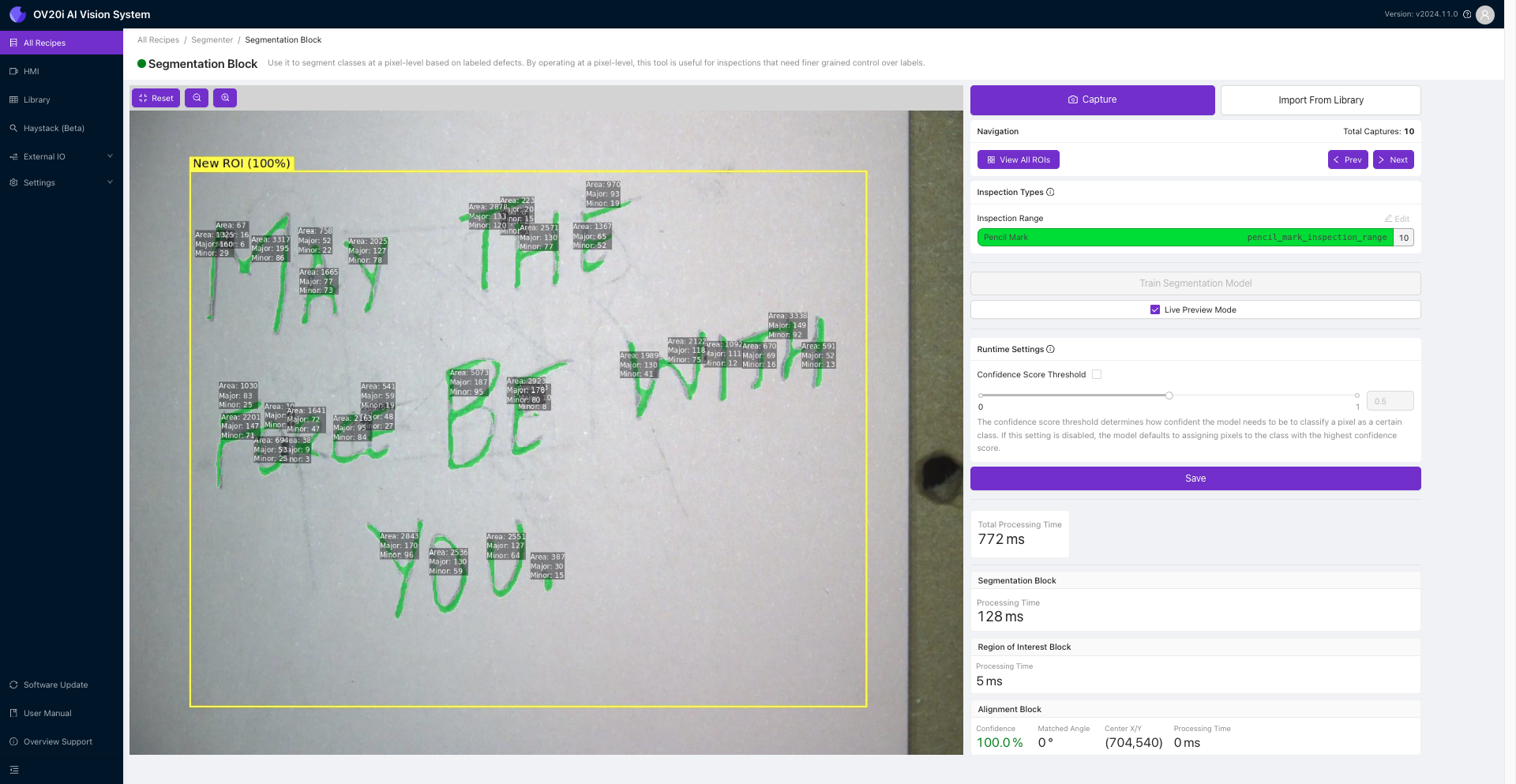

Capture at least 10 images of the sheet with different pencil markings on it. In each image, use the Brush tool to trace over the pencil marks, painting only the pencil marks and nothing else. Click Save Annotations, then repeat for the next image.

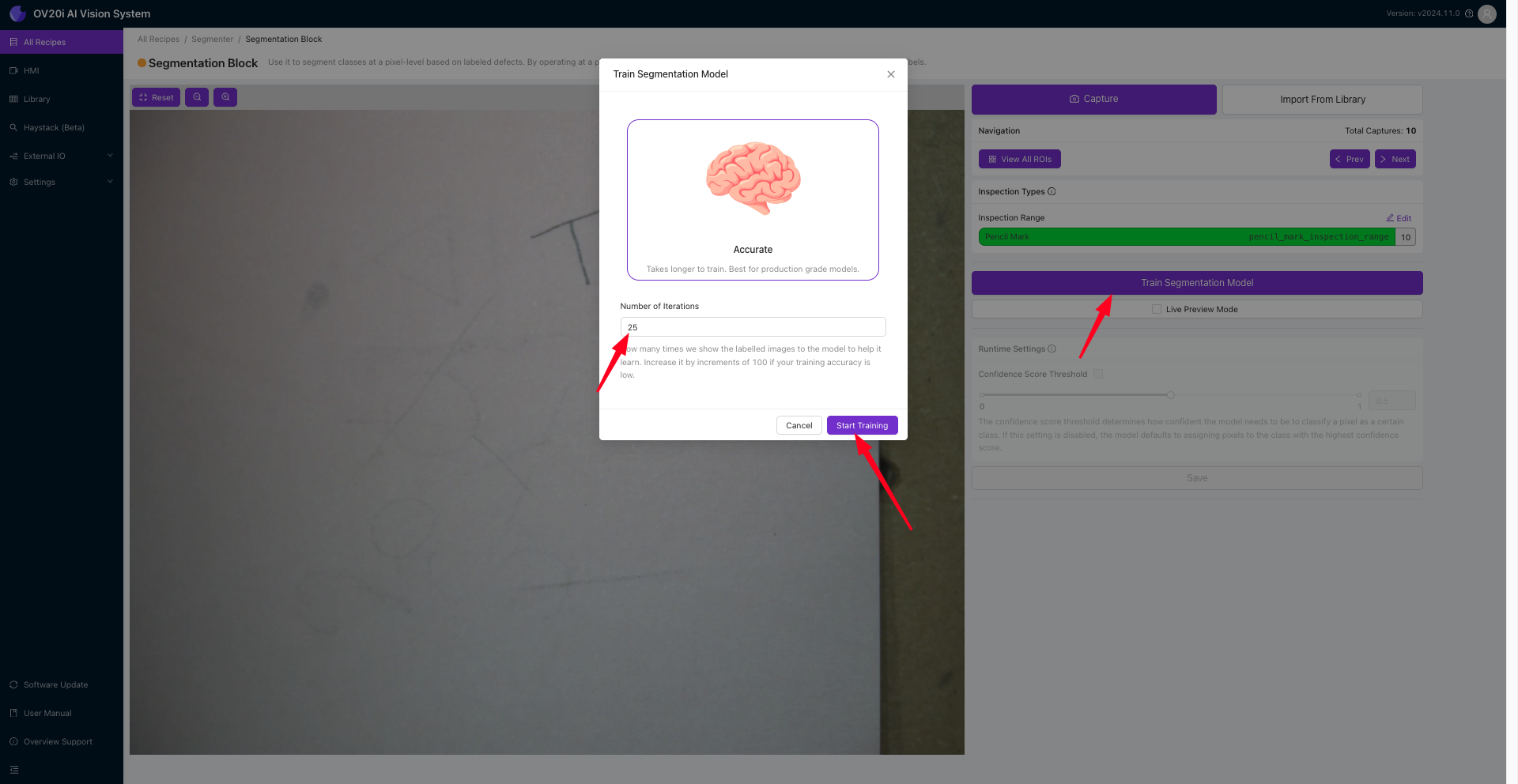

Once you have labeled at least 10 images, double-check them to make sure everything is labeled correctly. Then click Return to Live, followed by Train Segmentation Model. Enter the Number of Iterations to train the model for. More Iterations generally improve the model's accuracy, but they also make training take longer.

Balance the need for accuracy against the time you have available for training. Once you've chosen your settings, click Start Training to begin. The system will start training the model, and you can monitor its progress.

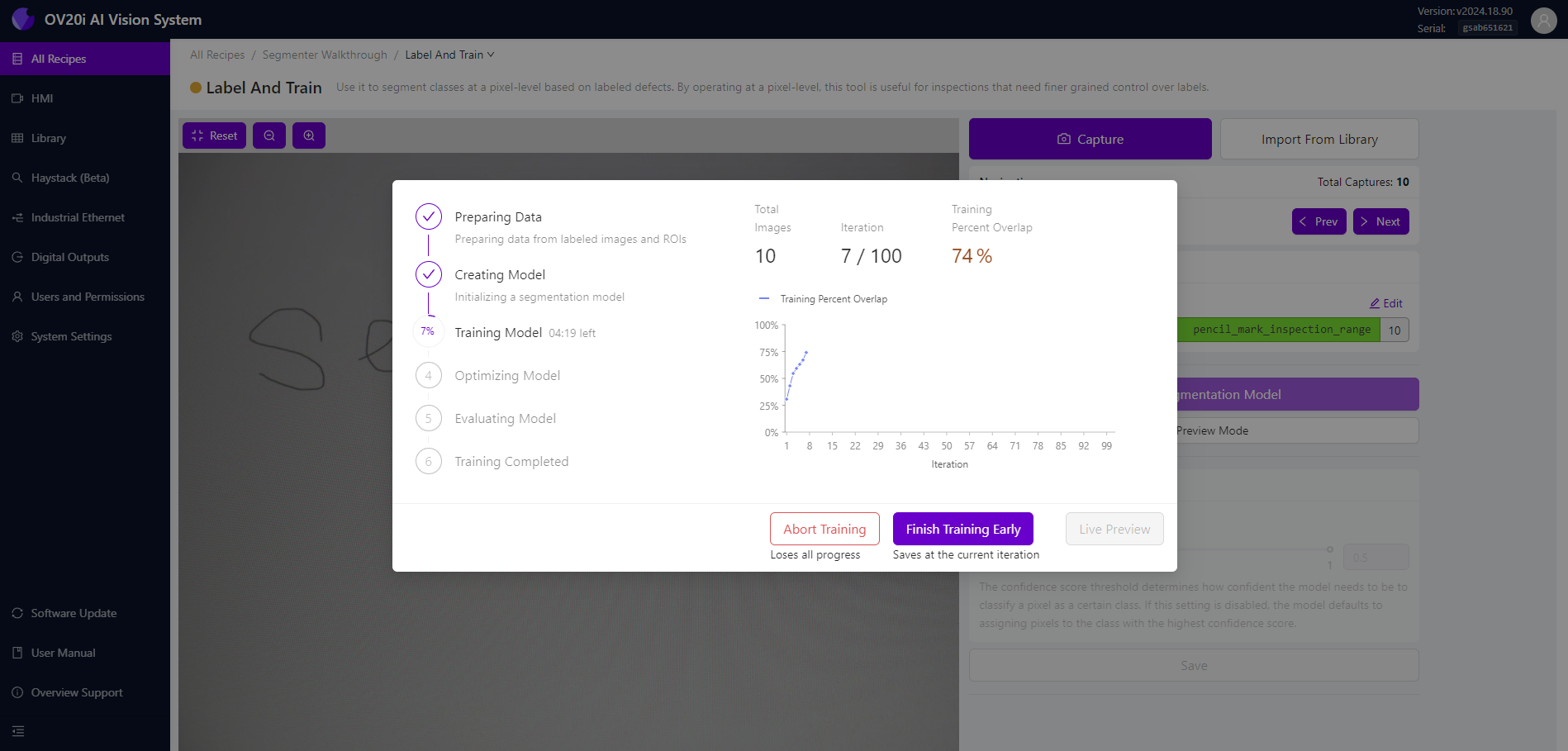

After clicking on the Start Training button, a model training progress modal will be displayed. Here, you can see the current Iteration number and the accuracy value. If you need to stop the training for any reason, click the Abort Training button. If the training accuracy is already sufficient, you can finish the training early by clicking on the Finish Training Early button.

Note

Training finishes automatically once the target training accuracy is reached.
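Conceptually, the automatic finish behaves like early stopping: training ends when the accuracy target is met or, presumably, when the Number of Iterations you entered is used up, whichever comes first. The sketch below illustrates only that idea; `trainWithEarlyStop`, `trainStep`, and `targetAccuracy` are hypothetical names, and the OV20i's actual stopping criteria are not described in this guide.

```javascript
// Conceptual early-stopping loop (hypothetical, not the OV20i's internals):
// stop as soon as accuracy reaches the target, or when the iteration budget runs out.
function trainWithEarlyStop(trainStep, maxIterations, targetAccuracy) {
  let accuracy = 0;
  for (let iteration = 1; iteration <= maxIterations; iteration++) {
    accuracy = trainStep(iteration); // one training step; returns current accuracy (0..1)
    if (accuracy >= targetAccuracy) {
      // "Early" means the target was hit before the budget was exhausted.
      return { iteration, accuracy, stoppedEarly: iteration < maxIterations };
    }
  }
  return { iteration: maxIterations, accuracy, stoppedEarly: false };
}

// Fake trainer whose accuracy climbs by 0.1 per iteration.
const result = trainWithEarlyStop(i => Math.min(i * 0.1, 1), 50, 0.9);
console.log(result); // stops at iteration 9 of 50
```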

Once the training is complete, you can check the training accuracy and evaluate the model's performance on the validation data. If you're satisfied with the results, you can save the model and use it for your analysis. If not, you can go back and adjust the settings as needed and retrain the model until you're satisfied with the performance.

Once training is complete, click Live Preview to see the trained model highlighting the pencil marks in real time. Congratulations! You have trained your first segmentation model.

Configure pass/fail logic

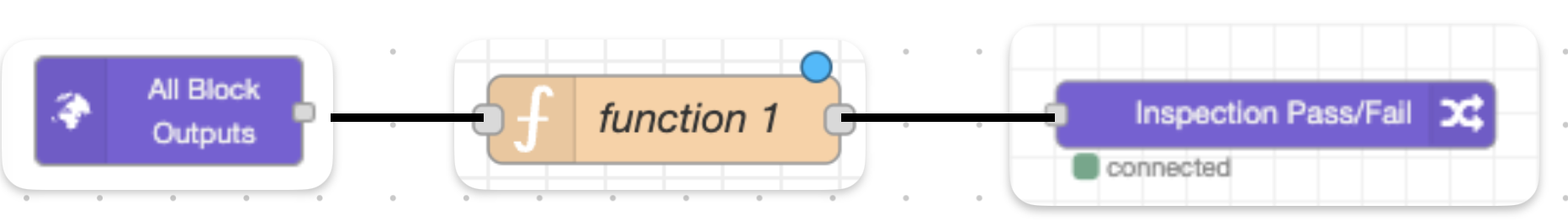

In the steps below, we’ll walk you through configuring pass/fail logic for a segmentation recipe using Node-RED logic.

Note

Ensure all the AI Blocks are trained (green) before editing the IO Block.

Navigate to IO Block using the breadcrumb menu or select Configure I/O from the Recipe Editor page.

Delete the Classification Block Logic and build the following Node-RED flow by dragging in nodes from the left-hand side and connecting them.

Double-click the function 1 node, then copy and paste the desired example code from the sections below into the On Message tab.

Click Done.

Click Deploy.

Navigate to the HMI and test your logic.
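Besides testing on the HMI, you may find it faster to check function-node code locally with Node.js. Node-RED runs the On Message code as the body of a function that receives `msg` (along with objects such as `node` and `context`). The hypothetical `runFunctionNode` helper below supplies only `msg`, so it suits self-contained logic like the code samples below; the mock payload shape is inferred from the fields those samples read, not from a published schema.

```javascript
// Hypothetical local harness: wrap On Message code in a function that takes `msg`.
// Only `msg` is provided, so code that uses node, context, flow, or global won't work here.
function runFunctionNode(onMessageCode, msg) {
  const handler = new Function("msg", onMessageCode);
  return handler(msg);
}

// Same logic as the "Pass if no pixels are detected" sample.
const onMessageCode = `
  const allBlobs = msg.payload.segmentation.blobs;
  msg.payload = allBlobs.length < 1;
  return msg;
`;

// Mock message with one detected blob (shape assumed from the samples).
const mockMsg = { payload: { segmentation: { blobs: [{ pixel_count: 120 }] } } };
console.log(runFunctionNode(onMessageCode, mockMsg).payload); // false: one blob was detected
```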

Code samples

Pass if no pixels are detected

Copy and paste the following logic:

```javascript
const allBlobs = msg.payload.segmentation.blobs; // Extract the blobs from the payload's segmentation data
const results = allBlobs.length < 1; // Check if there are no blobs and store the result (true or false)
msg.payload = results; // Set the payload to the result of the check
return msg; // Return the modified message object
```

Pass if all blobs detected are smaller than the defined threshold

Copy and paste the following logic:

```javascript
const threshold = 500; // Define the threshold value for pixel count
const allBlobs = msg.payload.segmentation.blobs; // Extract the blobs from the payload's segmentation data
const allUnderThreshold = allBlobs.every(blob => blob.pixel_count < threshold); // Check if all blobs have a pixel count less than the threshold
msg.payload = allUnderThreshold; // Set the payload to the result of the check
return msg; // Return the modified message object
```

Pass if the total number of pixels detected is less than the defined threshold

Copy and paste the following logic:

```javascript
const threshold = 5000; // Define the threshold value for the total pixel count
const allBlobs = msg.payload.segmentation.blobs; // Extract the blobs from the payload's segmentation data
const totalArea = allBlobs.reduce((sum, blob) => sum + blob.pixel_count, 0); // Calculate the total pixel count of all blobs
msg.payload = totalArea < threshold; // Set the payload to true if the total area is less than the threshold, otherwise false
return msg; // Return the modified message object
```
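To see how these pass/fail rules differ before deploying, you can express each one as a plain function over the blobs array and run them against the same mock detections. This is a sketch: the function names and mock data are hypothetical, `pixel_count` is the field the samples read, and the default thresholds are the sample values of 500 and 5000.

```javascript
// The three sample rules as plain functions over the blobs array.
const noBlobs = blobs => blobs.length < 1;
const allBlobsUnder = (blobs, threshold = 500) =>
  blobs.every(blob => blob.pixel_count < threshold); // true for an empty array
const totalAreaUnder = (blobs, threshold = 5000) =>
  blobs.reduce((sum, blob) => sum + blob.pixel_count, 0) < threshold;

// Hypothetical mock detections.
const cases = {
  clean: [],
  fewMarks: [{ pixel_count: 120 }, { pixel_count: 300 }, { pixel_count: 450 }],
  manyMarks: Array.from({ length: 12 }, () => ({ pixel_count: 450 })), // 12 x 450 = 5400
  oneSmudge: [{ pixel_count: 5200 }],
};

for (const [name, blobs] of Object.entries(cases)) {
  console.log(name, noBlobs(blobs), allBlobsUnder(blobs), totalAreaUnder(blobs));
}
// clean true true true
// fewMarks false true true
// manyMarks false true false
// oneSmudge false false false
```

Two behaviors stand out. `every` returns `true` for an empty array, so the per-blob rule also passes when nothing is detected. And the total-area rule fails a sheet with many small marks even though each mark passes the per-blob check.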